SoundSee – insight with Audio AI

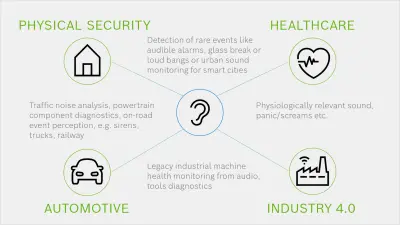

SoundSee uses powerful audio signal processing algorithms built with machine learning to interpret sounds in the environment at an unprecedented scale. Its potential applications, in Industry 4.0, healthcare, building technologies and beyond, are wide-ranging.

Podcast about SoundSee

The Bosch Global Podcast From KNOW-HOW to WOW offers initial insights into the topic. This episode is all about sound, or more specifically, about seeing with sound. Three guests share how they use sound in their daily lives to overcome very different challenges.

Giving meaning to sound patterns

Modern sensors may hear better than humans, but they lack the ability to understand sound the way humans do. Bosch Research set out to develop robust, scalable audio signal processing and machine learning algorithms that can understand sound patterns.

Along with human speech, two key classes of sound are environmental sounds and machine sounds. Environmental sounds are all around us: a car passing by, a door closing or glass breaking. Machine sounds originate from machines such as engines, tools or motors. These sound patterns encode physical events that, once interpreted, are of practical use. Did the door latch properly when it was closed? Does the fan on a cooling unit sound like it is about to malfunction?

Bosch Research’s SoundSee technology uses Audio AI, powerful audio signal processing algorithms built with machine learning, to give meaning to the sounds it hears. It is also designed to listen to and map multiple sound sources in the environment. For example, a SoundSee microphone array mounted on an automated ground vehicle in a factory or warehouse can periodically map the acoustic signatures of various machines and equipment to monitor their operational health.
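The article does not say how SoundSee localizes sound sources. As a hedged illustration of the basic principle behind mapping sources with a microphone array, the sketch below estimates the time difference of arrival (TDOA) between two microphones by maximizing their cross-correlation, then converts that delay into a direction. The two-microphone geometry, the far-field assumption, the 343 m/s speed of sound and the function names `estimate_delay` and `direction_of_arrival` are all illustrative assumptions; practical arrays typically use more microphones and more robust weightings such as GCC-PHAT.

```python
import math
import random

# Illustrative sketch only: names and parameters are hypothetical, not SoundSee's.
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed)

def estimate_delay(x, y, max_lag):
    """Lag in samples by which y trails x, chosen to maximize their cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Sum of x[n] * y[n + lag] over the samples where both signals overlap.
        score = sum(x[n] * y[n + lag] for n in range(len(x)) if 0 <= n + lag < len(y))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def direction_of_arrival(x, y, fs, mic_spacing):
    """Angle in degrees from broadside for a far-field source; positive means it reached x first."""
    # The largest physically possible delay is the acoustic travel time across the array.
    max_lag = math.ceil(mic_spacing / SPEED_OF_SOUND * fs)
    tau = estimate_delay(x, y, max_lag) / fs
    # Clamp to [-1, 1] because sample quantization can push the ratio slightly out of range.
    ratio = max(-1.0, min(1.0, SPEED_OF_SOUND * tau / mic_spacing))
    return math.degrees(math.asin(ratio))

# Usage: white noise that reaches microphone 2 three samples after microphone 1.
random.seed(0)
fs, spacing = 16000, 0.1  # 16 kHz sampling, 10 cm between microphones (illustrative)
source = [random.gauss(0.0, 1.0) for _ in range(2000)]
mic1 = source
mic2 = [0.0] * 3 + source[:-3]
lag = estimate_delay(mic1, mic2, 5)
angle = direction_of_arrival(mic1, mic2, fs, spacing)
```

The cross-correlation is computed directly here for readability; for long recordings an FFT-based implementation is the usual choice.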

Bosch Research scientists began developing SoundSee’s audio AI capabilities five years ago, at a time when very few people were exploring sound from an AI standpoint. It didn’t take long for their research to take off.

Out of this world

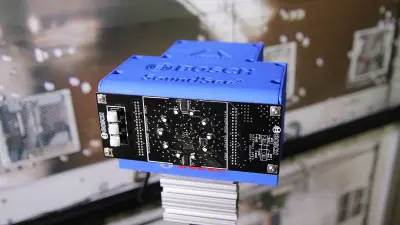

As part of a research mission with NASA, SoundSee was launched to the International Space Station (ISS) in 2019, using microphones mounted on mini robots to record the sound of machinery and equipment aboard the station. Once the recordings are transmitted to the ground, Bosch Research scientists can evaluate the audio on Earth and determine whether the sounds match or deviate from expected sound patterns.
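How recordings are compared with expected sound patterns is not detailed in the article. One simple, classical way to score such deviations, sketched here under stated assumptions, is to learn a per-frequency baseline from known-good recordings and report the largest z-score of a new recording’s log spectrum. The frame length, the naive DFT, the synthetic “machine” signals and the function names are hypothetical choices for illustration, not SoundSee’s actual pipeline.

```python
import cmath
import math
import random
import statistics

# Illustrative sketch only: features, names and data are hypothetical.
def log_spectrum(signal, frame_len=64):
    """Average log-magnitude spectrum over non-overlapping frames (naive DFT, for clarity not speed)."""
    n_bins = frame_len // 2 + 1
    n_frames = len(signal) // frame_len
    acc = [0.0] * n_bins
    for f in range(n_frames):
        frame = signal[f * frame_len:(f + 1) * frame_len]
        for k in range(n_bins):
            coeff = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                        for n in range(frame_len))
            acc[k] += abs(coeff)
    return [math.log(a / n_frames + 1e-9) for a in acc]

def fit_baseline(normal_recordings):
    """Per-bin mean and standard deviation of log spectra from known-good recordings."""
    bins = list(zip(*(log_spectrum(r) for r in normal_recordings)))
    return ([statistics.fmean(b) for b in bins],
            [statistics.pstdev(b) + 1e-6 for b in bins])

def max_deviation(recording, baseline):
    """Largest absolute z-score of any frequency bin relative to the baseline."""
    means, stds = baseline
    return max(abs(s - m) / sd for s, m, sd in zip(log_spectrum(recording), means, stds))

# Usage: a synthetic machine hum at bin 4; the faulty unit adds a whine at bin 12.
random.seed(1)

def machine(n=256, fault=False):
    sig = [math.sin(2 * math.pi * 4 * t / 64) + 0.05 * random.gauss(0.0, 1.0) for t in range(n)]
    if fault:
        sig = [s + 0.5 * math.sin(2 * math.pi * 12 * t / 64) for t, s in enumerate(sig)]
    return sig

baseline = fit_baseline([machine() for _ in range(20)])
normal_score = max_deviation(machine(), baseline)
fault_score = max_deviation(machine(fault=True), baseline)
```

Using the maximum rather than the mean z-score keeps a single strongly deviating frequency band, such as a new whine, from being diluted by the many unchanged bands.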

Analyzing these deviations with audio AI could help determine whether a machine or one of its components needs repair or replacement. Automation of this kind can improve the station’s operations and productivity. It also reduces the astronauts’ workload, so they can spend the time saved on their research activities. Hear more about SoundSee and its role aboard the station from former ISS commander and NASA astronaut Dr. Mike Foale in this video.


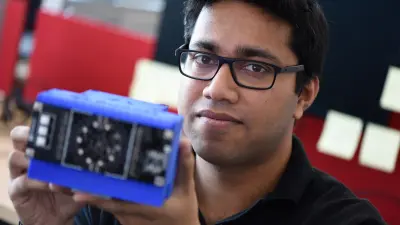

In Pittsburgh’s SoundSee Research Facility, Sam Das and his team can communicate with the International Space Station (ISS) and use their quiet room to develop audio signal processing algorithms.

The quiet room in the Pittsburgh office replicates the International Space Station (ISS) and allows researchers to test their ability to detect sound anomalies.

Audio AI’s limitless potential

But SoundSee’s applications go beyond space. Because audio sensing is inexpensive and versatile, and microphones fit almost anywhere while staying out of sight, the technology applies across a wide range of industries and settings.

Predictive maintenance in industrial settings is one area where SoundSee can have an impact by augmenting existing sensing capabilities. For instance, in a heating, ventilation and air conditioning (HVAC) system, SoundSee could analyze a motor’s sound and predict a malfunction before it occurs by learning from subtle deviations in its noise signature. It could thus serve as an additional monitoring layer for early warning systems, which offer considerable value to industry by reducing downtime and avoiding extensive repair costs.
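The article does not specify how SoundSee would predict a malfunction. A common classical way to turn a stream of per-recording deviation scores into an early warning is the one-sided CUSUM test (Page’s cumulative sum), which accumulates only persistent upward drift and ignores ordinary fluctuations. In this sketch the function name, the `target`, `slack` and `threshold` values and the score sequences are all hypothetical.

```python
# Illustrative sketch only: a classical change detector, not SoundSee's method.
def cusum_alarm(scores, target, slack, threshold):
    """One-sided CUSUM: index of the first score at which the accumulated
    upward drift exceeds `threshold`, or None if it never does."""
    drift = 0.0
    for i, score in enumerate(scores):
        # Only excess above target + slack accumulates; smaller values pull drift back toward zero.
        drift = max(0.0, drift + (score - target - slack))
        if drift > threshold:
            return i
    return None

# Usage: deviation scores hover at 1.0; in the degrading case they rise to 1.75 from index 50
# (e.g. a hypothetical worn bearing).
healthy = [1.0] * 100
degrading = [1.0] * 50 + [1.75] * 50
alarm_healthy = cusum_alarm(healthy, target=1.0, slack=0.25, threshold=2.0)
alarm_degrading = cusum_alarm(degrading, target=1.0, slack=0.25, threshold=2.0)
```

Here the alarm fires on the fifth degraded recording (index 54), while the healthy sequence never triggers it. A smaller `slack` or `threshold` reacts sooner at the price of more false alarms.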

Healthcare is another exciting application domain for SoundSee. The human body generates sounds, such as the heartbeat or breathing, that can contain clinically relevant information. By listening to the body, SoundSee could contribute to holistic, data-driven healthcare decisions. It could also register physiological sound indicators of distress and raise an audible alarm when a person requires assistance. The ability to detect panic in a person’s voice or a cry for help could make the difference in an emergency.
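As a hedged example of extracting information from a periodic body sound, the sketch below estimates a repetition rate (for instance heartbeats or breaths per minute) from the autocorrelation of an amplitude envelope. It assumes the envelope has already been extracted from the audio; the function name, the 40 to 180 per-minute search range, the sampling rate and the synthetic pulse train are illustrative and not clinically validated.

```python
import math

# Illustrative sketch only: names, ranges and data are hypothetical.
def rate_per_minute(envelope, fs, min_per_min=40, max_per_min=180):
    """Estimate the repetition rate of a periodic envelope from its autocorrelation peak.

    Only lags corresponding to rates between min_per_min and max_per_min are searched.
    """
    mean = sum(envelope) / len(envelope)
    x = [v - mean for v in envelope]
    shortest = max(1, int(fs * 60 / max_per_min))
    longest = min(len(x) - 1, int(fs * 60 / min_per_min))
    best_lag, best_r = shortest, float("-inf")
    for lag in range(shortest, longest + 1):
        # Average product of the envelope with a copy of itself delayed by `lag` samples.
        r = sum(x[n] * x[n + lag] for n in range(len(x) - lag)) / (len(x) - lag)
        if r > best_r:
            best_lag, best_r = lag, r
    return 60.0 * fs / best_lag

# Usage: a synthetic pulse envelope at 1.25 Hz (75 per minute), sampled at 50 Hz for 12 s.
fs = 50
envelope = [max(0.0, math.sin(2 * math.pi * 1.25 * t / fs)) ** 2 for t in range(12 * fs)]
rate = rate_per_minute(envelope, fs)
```

Restricting the lag range matters: without it, multiples of the true period would also produce strong autocorrelation peaks.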

As an all-round talent, SoundSee’s audio AI could also be integrated as a value-added feature in building technology solutions, particularly for physical safety and security, e.g. by detecting and localizing threat events such as alarms or breaking glass.

Audio AI needs high quality data

Adapting the SoundSee audio AI to a given environment and ensuring robust performance is a challenging task, and it requires high-quality training data. Bosch Research scientists combine classical signal processing with data-driven AI (machine learning) to solve the unique challenges of each application scenario. A well-defined use case with a clear performance metric determines which sounds the audio AI must be trained on, and appropriate labels or annotations (human-assigned labels and/or other sensor signals serving as supervisory cues) make that training possible. Both are critical for machine learning.
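To make the role of labels and metrics concrete, here is a minimal sketch under hypothetical assumptions: frames are labelled anomalous whenever a co-recorded sensor exceeds a threshold (one form of using other sensor signals as supervisory cues), and an audio detector is then scored with precision, recall and F1. The function names, threshold, sensor values and detections are invented for illustration.

```python
# Illustrative sketch only: names, threshold and data are hypothetical.
def labels_from_sensor(sensor_values, threshold):
    """Supervisory cue: mark a frame anomalous when a co-recorded sensor exceeds threshold."""
    return [value > threshold for value in sensor_values]

def precision_recall_f1(predicted, actual):
    """Standard precision, recall and F1 for binary detections."""
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Usage: illustrative pressure readings provide labels for six audio frames.
labels = labels_from_sensor([0.1, 0.2, 0.9, 0.8, 0.1, 0.95], threshold=0.5)
audio_detections = [False, True, True, False, False, True]
precision, recall, f1 = precision_recall_f1(audio_detections, labels)
```

Which metric to optimize depends on the use case: an early warning system may favour recall, whereas an alarm that dispatches staff may favour precision.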

Recently, the SoundSee team achieved promising results in a collaborative predictive maintenance project with Bosch Powertrain Solutions. The audio AI was trained on a multi-modal dataset that included audio and torque signals recorded before and after artificially induced damage to six fuel pumps. The project showed that audio AI can infer other signal modalities (e.g. torque, fuel pressure) with surprisingly high fidelity; these signals in turn correlate strongly with the operating state or malfunctions of the pumps. Such “virtual sensing” with Audio AI is a promising way to augment current sensor data analytics solutions and improve predictive maintenance.
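The model used in the collaboration is not described in the article. A deliberately minimal sketch of the “virtual sensing” idea is a regression from an audio feature to a reference sensor: below, the RMS level of each audio frame is mapped to torque with ordinary least squares. The single feature, the linear model, the function names and the synthetic data are assumptions; a realistic system would use richer features, a nonlinear model and evaluation on held-out pumps.

```python
import math

# Illustrative sketch only: feature, model and data are hypothetical.
def frame_rms(signal, frame_len):
    """Root-mean-square level of each non-overlapping frame (one simple audio feature)."""
    return [math.sqrt(sum(v * v for v in signal[i:i + frame_len]) / frame_len)
            for i in range(0, len(signal) - frame_len + 1, frame_len)]

def fit_linear(x, y):
    """Ordinary least squares fit of y ≈ slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Usage: synthetic data in which the pump gets louder as torque rises (illustrative only).
frame_len = 64
torque = [1.0, 2.0, 3.0, 4.0, 5.0]  # one reference torque reading per audio frame
audio = [0.2 * tq * math.sin(2 * math.pi * 4 * n / frame_len)
         for tq in torque for n in range(frame_len)]
features = frame_rms(audio, frame_len)
slope, intercept = fit_linear(features, torque)
virtual_torque = [slope * f + intercept for f in features]
```

Once fitted on recordings where both audio and torque are available, the model can estimate torque from audio alone, which is the essence of a virtual sensor.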

600 million devices with sound recognition by 2023

Summary

SoundSee interprets the sounds in its environment using audio AI trained with high-quality data. Already launched to the International Space Station to perform research experiments, SoundSee can scale to a broad range of commercial uses here on Earth, such as predictive maintenance, early warning systems, building technologies and data-driven healthcare.


More details, for instance about the challenges of developing SoundSee, can be found in this interview with Bosch researcher Sam Das.