Simultaneous localization and mapping — helping robots find their way

Bosch Research Blog | Posted by Sebastian Scherer, Peter Biber, Gerhard Kurz, 2024-04-22

To navigate in a certain environment autonomously, mobile robots — from robotic vacuum cleaners to driverless shuttle buses — need two key things, namely an accurate map of their surroundings and information about their own position on that map.

To capture both sets of information at the same time, robots use a technique called simultaneous localization and mapping (SLAM). Since this technology plays a key role for many Bosch products, we answer the most important questions about it here.

What is SLAM?

Simultaneous localization and mapping is one of the key problems for mobile robots.

A mobile robot may need to map its initially completely unfamiliar environment with the help of its sensors and, at the same time, determine its own position on the map it is creating. The map and localization data that the robot generates using SLAM are an important basis for navigation but may also be used for other purposes.

One example is the planning of movements: the map serves as a basis to find a collision-free path from the starting point to the desired destination. To follow this path in a controlled manner, the motion controller of a robot needs to estimate its position precisely and reliably at all times.
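To make the idea of planning on a map concrete, here is a minimal sketch, assuming the map is a small 2D occupancy grid and using plain breadth-first search. The function name `plan_path` and the grid encoding are illustrative assumptions, not the planner used in any Bosch product.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid.

    grid[r][c] == 1 marks an obstacle, 0 marks free space (assumed encoding).
    Returns a list of (row, col) cells from start to goal, or None if the
    goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to reconstruct the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


occupancy = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
path = plan_path(occupancy, (0, 0), (2, 0))
print(path)
```

In this toy map the wall in the middle row forces the path around the right-hand side. A real planner would additionally account for the robot's footprint and kinematics.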

Depending on the main type of sensor the SLAM system uses, we also call this “visual SLAM” or “LiDAR SLAM,” for example. When a combination of different sensors is used, we refer to it as “multimodal SLAM.” Furthermore, almost every robot uses additional sensors such as wheel odometry or inertial sensors.

Why is SLAM of interest for Bosch?

As a basic technology, SLAM is used in almost every mobile robot and, needless to say, this also includes Bosch products such as the ACTIVE Shuttle. With the ROKIT Locator, there is even a SLAM software component available from Bosch Rexroth, which has its roots in Bosch Research.

Beyond that, SLAM also enables many other applications: today’s virtual- and augmented-reality headsets rely on visual SLAM for inside-out tracking. Mobile mapping systems based on LiDAR SLAM replace unwieldy terrestrial laser scanners. Finally, SLAM is also an important core technology for many automotive applications.

How is Bosch Research engaged in the topic of SLAM?

As a fundamental robotic technology, SLAM has been a focal point of research at Bosch since the inception of robotics research. The SLAM pipeline developed at Bosch Research can be traced back to a collaboration with Bosch Engineering GmbH (BEG) in 2016. At that time, BEG was seeking a dependable and precise localization solution for the intralogistics robot prototype “Autobod,” which was the predecessor of today’s ACTIVE Shuttle shown in the picture above. Through numerous joint tests and data recording sessions conducted across various facilities, we were able to gather the data needed to identify the best technological options for LiDAR SLAM. As development progressed, our SLAM portfolio expanded to cover a diverse set of robots and use cases, from compact consumer robots to large-scale automated container transport vehicles.

By now, the team has developed a versatile, robust, and efficient SLAM pipeline for LiDAR (2D and 3D), radar, and visual SLAM. And while the team is constantly working on making the system more accurate and supporting more applications, there are also two research topics that are more orthogonal to that: lifelong SLAM and semantic SLAM.

Lifelong SLAM

SLAM algorithms were initially developed in lab environments that were limited in scope and not subject to changes. To use SLAM in real-world use cases, additional challenges need to be overcome. In particular, a robot may spend a long time inside an environment, revisiting the same locations over and over again.

Over time, the environment may change. In an outdoor setting, for example, parked cars may move, plants may grow, and the seasons may alter how the environment looks. One key aspect of lifelong SLAM is to refresh the map so that it reflects these changes and stays up to date.

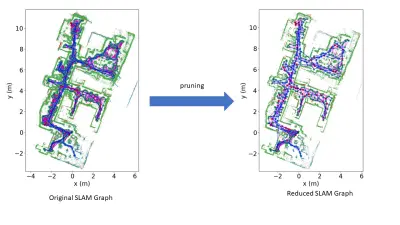

A big challenge in lifelong SLAM is the amount of collected data. If a robot traverses an environment for hours, days, weeks, months, or even years, huge amounts of measurements are collected. Storing all these measurements and incorporating them into the map is impractical due to limitations in the memory and computing power available. Thus, the lifelong SLAM system has to carefully remove old and redundant data, while making sure that no important information is lost. For this purpose, we employ a pruning algorithm that removes data in areas of the map where the SLAM graph is very dense.
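The pruning idea can be illustrated with a deliberately simplified sketch. It assumes that graph nodes are 2D poses with timestamps and that "dense" means "more than a fixed number of nodes per grid cell". The function `prune_dense_nodes` and its parameters are hypothetical, and the sketch ignores the graph edges that a real pruning algorithm has to keep consistent.

```python
from collections import defaultdict


def prune_dense_nodes(nodes, cell_size=1.0, max_per_cell=2):
    """Keep only the newest `max_per_cell` nodes in every grid cell.

    nodes: list of (node_id, timestamp, x, y) tuples (assumed layout).
    Returns the surviving nodes sorted by node_id.
    """
    cells = defaultdict(list)
    for node in nodes:
        _, _, x, y = node
        key = (int(x // cell_size), int(y // cell_size))
        cells[key].append(node)

    kept = []
    for members in cells.values():
        # Newest first, so older and redundant nodes are dropped.
        members.sort(key=lambda n: n[1], reverse=True)
        kept.extend(members[:max_per_cell])
    return sorted(kept, key=lambda n: n[0])


nodes = [
    (0, 0.0, 0.1, 0.1),  # oldest node in a crowded cell
    (1, 1.0, 0.2, 0.3),
    (2, 2.0, 0.4, 0.2),
    (3, 3.0, 5.0, 5.0),  # only node in its cell
]
kept_ids = [n[0] for n in prune_dense_nodes(nodes)]
print(kept_ids)
```

Only the crowded cell loses a node here, while the sparsely covered area is left untouched. This mirrors the goal of removing redundancy without discarding information the map still needs.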

Another crucial aspect of lifelong SLAM is failure handling, especially when the robot encounters the “kidnapped robot problem,” for example because it was moved while turned off or because its sensors were occluded. In such a case, the robot needs to be able to relocalize inside its map and continue performing SLAM once the relocalization succeeds. This can be especially challenging if different parts of the environment have a similar appearance, for example multiple identical shelves in a warehouse. To handle such cases, we first create a new map when the robot is lost and merge it later with the previous map once we have collected enough information to relocalize reliably.
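The "new map first, merge later" strategy can be sketched as a small state machine. Everything here is an assumption made for illustration: the class `LifelongSlamSketch`, the two states, and the rule that a fixed number of matches against the old map counts as "enough information". A real merge would also have to estimate the transform between the two maps, which this sketch replaces with a plain list concatenation.

```python
from enum import Enum, auto


class State(Enum):
    TRACKING = auto()  # localized in the long-term map
    LOST = auto()      # building a temporary map until relocalized


class LifelongSlamSketch:
    def __init__(self, merge_threshold=3):
        self.state = State.TRACKING
        self.main_map = []   # observations in the long-term map
        self.temp_map = []   # observations collected while lost
        self.matches = 0     # evidence gathered for relocalization
        self.merge_threshold = merge_threshold

    def step(self, observation, tracking_ok=True, matches_main_map=False):
        """Process one observation and return the resulting state.

        tracking_ok is only consulted while TRACKING,
        matches_main_map only while LOST.
        """
        if self.state is State.TRACKING:
            if tracking_ok:
                self.main_map.append(observation)
            else:
                # Robot is lost: start a fresh map instead of corrupting the old one.
                self.state = State.LOST
                self.temp_map = [observation]
                self.matches = 0
            return self.state

        self.temp_map.append(observation)
        if matches_main_map:
            self.matches += 1
        if self.matches >= self.merge_threshold:
            # Enough evidence: merge the temporary map and resume tracking.
            self.main_map.extend(self.temp_map)
            self.temp_map = []
            self.state = State.TRACKING
        return self.state


slam = LifelongSlamSketch(merge_threshold=2)
slam.step("a")
slam.step("b", tracking_ok=False)
slam.step("c", matches_main_map=True)
final_state = slam.step("d", matches_main_map=True)
print(final_state, slam.main_map)
```

Requiring several matches before merging is one simple way to guard against a single misleading match in a repetitive environment such as a warehouse aisle.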

Semantic SLAM

Semantic SLAM is the combination of deep neural networks (DNNs) and SLAM, with the aim of creating a semantic scene understanding. While a classical geometric map tells the robot about obstacles and free space, a semantic map can additionally contain a list of relevant objects with information about their precise positions and extents, so the robot can interact with them.

How this can work is illustrated in the figure above: given a stream of sensor data, geometric SLAM accurately tracks the sensor pose and creates a geometric map, for example containing point clouds. Semantic SLAM can additionally be enabled, assuming there is a DNN that can perform object detection, panoptic segmentation, or both.

Detected objects are clustered and merged into hypotheses, which are in turn tracked and turned into landmarks that have their pose and extents optimized within the SLAM graph optimization. The result is a list of robustly and accurately mapped objects that can be used as navigation goals, among other things.
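The step from detections to landmarks can be sketched roughly as follows, assuming that detections arrive as `(label, (x, y))` pairs already expressed in map coordinates. The names `Hypothesis`, `associate`, and `landmarks` are hypothetical, and a running mean stands in for the pose and extent optimization that the SLAM graph performs in the real system.

```python
import math


class Hypothesis:
    """An object candidate built from repeated detections."""

    def __init__(self, label, position):
        self.label = label
        self.position = position  # running mean of (x, y)
        self.count = 1            # number of supporting detections

    def update(self, position):
        self.count += 1
        self.position = tuple(
            old + (new - old) / self.count
            for old, new in zip(self.position, position)
        )


def associate(hypotheses, detections, max_dist=0.5):
    """Merge each detection into the nearest same-label hypothesis,
    or start a new hypothesis if none is within max_dist."""
    for label, position in detections:
        best, best_dist = None, max_dist
        for hyp in hypotheses:
            if hyp.label != label:
                continue
            dist = math.dist(hyp.position, position)
            if dist <= best_dist:
                best, best_dist = hyp, dist
        if best is None:
            hypotheses.append(Hypothesis(label, position))
        else:
            best.update(position)
    return hypotheses


def landmarks(hypotheses, min_count=3):
    """Promote hypotheses that were observed often enough."""
    return [h for h in hypotheses if h.count >= min_count]


hyps = []
associate(hyps, [("pallet", (1.0, 1.0))])
associate(hyps, [("pallet", (1.2, 1.0)), ("shelf", (5.0, 5.0))])
associate(hyps, [("pallet", (1.1, 1.0))])
stable = landmarks(hyps)
print([(h.label, h.count) for h in stable])
```

The pallet is seen three times and becomes a landmark, while the shelf, seen only once, remains a hypothesis. Requiring repeated observations is a simple way to suppress spurious detections.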

Panoptic segmentation provides per-pixel semantic and instance labels that can in turn be transferred to the point cloud of a camera or LiDAR sensor. We employ two levels of label voting, both on a local and a global scale, to end up with a consistent panoptic point cloud map. This can be used as an alternative way to extract relevant objects without an explicit detector or to selectively remove or highlight obstacles of certain types.
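One possible reading of two-level label voting is sketched below. It assumes that the local vote is a majority vote per map voxel and that the global vote is a majority vote per object instance. This interpretation, the function `vote_labels`, and the 2D voxel grid are assumptions made for illustration, not a description of the actual pipeline.

```python
from collections import Counter, defaultdict


def majority(items):
    """Return the most frequent item."""
    return Counter(items).most_common(1)[0][0]


def vote_labels(observations, voxel_size=0.5):
    """Two-stage majority voting over labeled points.

    observations: list of (x, y, instance_id, class_label) tuples.
    Returns {voxel: (instance_id, class_label)}.
    """
    # Local vote: every voxel picks its most frequent (instance, class) pair.
    per_voxel = defaultdict(list)
    for x, y, instance_id, label in observations:
        voxel = (int(x // voxel_size), int(y // voxel_size))
        per_voxel[voxel].append((instance_id, label))
    voxel_votes = {voxel: majority(votes) for voxel, votes in per_voxel.items()}

    # Global vote: every instance picks one class across all of its voxels.
    per_instance = defaultdict(list)
    for instance_id, label in voxel_votes.values():
        per_instance[instance_id].append(label)
    instance_label = {i: majority(labels) for i, labels in per_instance.items()}

    return {
        voxel: (instance_id, instance_label[instance_id])
        for voxel, (instance_id, _) in voxel_votes.items()
    }


observations = [
    (0.1, 0.1, 1, "pallet"),
    (0.2, 0.2, 1, "pallet"),
    (0.3, 0.1, 1, "box"),     # outvoted locally inside voxel (0, 0)
    (0.6, 0.1, 1, "pallet"),
    (1.1, 0.1, 1, "box"),     # outvoted globally within instance 1
]
labeled_map = vote_labels(observations)
print(labeled_map)
```

The local vote removes the mislabeled point inside the first voxel, and the global vote corrects the third voxel, so the whole instance ends up with one consistent class.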

Currently, at least two major challenges remain. The first is making these systems generalize to a wide range of domains, often with limited access to training data. A specific factory floor can contain quite different objects compared to the data available during training, a problem where foundation models can help. The second is making these systems work efficiently on affordable hardware, and we do not rely on Moore’s law alone to solve this.

What are your thoughts on this topic?

Please feel free to share them or to contact us directly.

Author 1

Sebastian Scherer

Sebastian is a senior expert for multimodal SLAM and currently leads the Semantic and Lifelong SLAM research activity at Bosch Research. He joined Bosch in 2015 after studying computer science at the University of Tübingen and the University of Waterloo and earning his PhD in visual SLAM for micro aerial vehicles from the University of Tübingen. After two years of working on subsea robotics and excavator automation at Bosch, he returned to the topic of SLAM in 2017. His expertise lies in SLAM based on a wide range of diverse sensors (cameras, radar, LiDAR, and inertial sensors).

Author 2

Peter Biber

Peter Biber is a senior expert for robot navigation at Bosch Corporate Research. His research interest is to enable mobile robots to fulfill useful tasks in the real world. He joined Bosch in 2006 and, since then, has made many contributions to Bosch Robotics. These include the concept and implementation of the navigation system for the autonomous lawnmower “Indego,” the navigation system for the agricultural robot Bonirob, and Bosch Research’s SLAM pipeline and its application to the intralogistics robot Autobod and the people mover “Campus Shuttle,” among others. Before joining Bosch, he earned his PhD at the University of Tübingen, Institute for Computer Graphics, with a thesis on the long-term operation of mobile robots in dynamic environments.

Author 3

Gerhard Kurz

Gerhard Kurz studied computer science at the Karlsruhe Institute of Technology (KIT), obtaining his PhD in robotics in 2015, and continued his work as a postdoc at KIT. He joined Bosch Corporate Research in 2018 and became part of the SLAM team. His recent research interests include lifelong SLAM and 3D SLAM.

Further Information

The following resources provide a more in-depth understanding of the investigated approaches.

Recent Publications

[ii] Detecting Invalid Map Merges in Lifelong SLAM

[iii] Geometry-based Graph Pruning for Lifelong SLAM

[iv] When Geometry is not Enough: Using Reflector Markers in Lidar SLAM

[v] Better Lost in Transition Than Lost in Space: SLAM State Machine

[vi] Plug-and-Play SLAM: A Unified SLAM Architecture for Modularity and Ease of Use

[vii] SGRec3D: Self-Supervised 3D Scene Graph Learning via Object-Level Scene Reconstruction

[viii] Lang3DSG: Language-based contrastive pre-training for 3D Scene Graph prediction