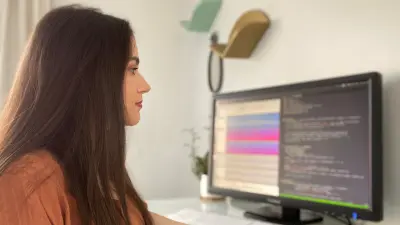

Dr. Shabnam Ghaffarzadegan

Senior research scientist in Artificial Intelligence, audio analytics

My primary research interests lie in human-machine collaboration, particularly as it relates to audio, language, and cognitive processing. In my research, I combine audio signal processing with domain-specific machine learning solutions. Specifically, I develop advanced audio scene classification and audio event detection solutions. The goal of my work is to equip machines with an understanding of audio and speech from the environment that is similar to that of humans. The results of my work are used to enhance machine intelligence and enable alternative forms of human-machine interaction.

Please tell us what fascinates you most about research.

Research gives me the opportunity to explore the unknown and learn new things at every stage. Every project shows a new perspective and new challenges in the field that I have worked in for a long time. The possibility of turning mistakes into a learning experience is also very valuable for me.

What makes research done at Bosch so special?

For me, the essential factor is the opportunity to conduct core research that I am passionate about while having a direct impact on products and everyday life. The idea that my research is not limited to papers and has a real impact is very valuable. Another exciting factor is the multi-disciplinary teams at Bosch, which bring new insights into each project from different fields and perspectives. Also, since Bosch is a multi-cultural company, we have the opportunity to understand people’s needs in different parts of the world and their acceptance of new technology. This information lets us tailor our products to be useful worldwide.

What research topics are you currently working on at Bosch?

The focus of my research at Bosch is combining domain-specific audio and speech technologies with general Artificial Intelligence concepts to develop a “Smart Ear” for machines. I incorporate audio perception into AI systems to help them understand the environment and navigate better in the real world.

What are the biggest scientific challenges in your field of research?

There are many challenges in the field of audio analytics, including:

1) Large variance within each audio category and its context, such as hardware, noise conditions, and acoustic environment. Capturing sound with different microphones, at a party, in a quiet house, or on a street, in a small room or in a conference hall: each of these conditions poses challenges to our systems. Our goal is to develop systems that can withstand such environmental and contextual variations;

2) Unlimited audio vocabulary in the real world, which makes it impossible to predict the lexicon of a given task. Unlike spoken words, which are built from a limited alphabet, environmental sounds vary without limit. Just think about the different sounds you hear every day. As a result, it is impossible to teach an AI system all the possible sounds in the world. We need to come up with a smarter AI agent that knows when it doesn’t know something;

3) Limited availability of annotated data, which is essential for deep learning solutions, due to the unlimited vocabulary and contextual variations;

4) Users’ privacy concerns about having a system listening to them continuously. As researchers, we need to ensure users’ privacy, limit the possible attacks that could compromise our AI systems, and be transparent about the information we use from users.

How do the results of your research become part of solutions "Invented for life"?

Effective machine audio perception, along with other modalities such as vision and natural language, can make the next generation of smart life technology a reality. For example, our technology can be used as a security system that detects glass breaking, a smoke alarm, a baby crying, or a dog barking, and alerts the user. It can teach smart speakers not to interrupt people while they are talking or when there are other loud noises in the environment. Last but not least, audio perception can help an automobile understand its environment, e.g., by detecting police or emergency vehicles passing by and taking appropriate action.

Curriculum vitae

2013-2016

EE Ph.D. graduate, automatic speech recognition, human-machine systems, The University of Texas at Dallas, Richardson (USA)

2015

Research intern, automatic scoring of non-native spontaneous speech, Educational Testing Service (ETS), Princeton (USA)

2009-2012

EE MS graduate, blind audio source separation and localization, Amirkabir University of Technology (Iran)

Selected publications

Y Sun et al. (2020)

- Yiwei Sun, Shabnam Ghaffarzadegan

- 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)

A Salekin et al. (2019)

- Asif Salekin, Shabnam Ghaffarzadegan, Zhe Feng, John Stankovic

B Kim et al. (2019)

- Bongjun Kim, Shabnam Ghaffarzadegan

- 2019 27th European Signal Processing Conference (EUSIPCO)

S Ghaffarzadegan (2018)

- Shabnam Ghaffarzadegan

- Proc AAAI Wrkshp Artificial Intelligence Applied to Assistive Technologies and Smart Environments
