How to increase robustness of AI perception for safety-critical domains

Bosch Research Blog | Post by Jan Hendrik Metzen, 2021-02-25

Autonomous systems such as future cars or robots need a robust perception of their environment in order to act safely. However, a malevolent actor, a so-called adversary, can consistently fool current systems by placing carefully crafted physical artifacts in a scene. Such physical artifacts can be either universal adversarial patches (where the adversary carefully choses a pattern that it prints out and places in a scene) or semi-transparent adversarial camera stickers on the camera lens. This post outlines Meta Adversarial Training (MAT), an approach for considerably increasing perception robustness against such adversarial attacks in the physical world.

AI perception is vulnerable to physical-world adversarial attacks

Deep learning (DL) has revolutionized environment perception for artificial autonomous systems such as cars or robots. It is used, for instance, for detecting objects such as pedestrians, vehicles, traffic lights, and traffic signs or for segmenting the drivable area in a scene. Deep neural networks are increasingly embedded into sensors such as the Bosch Multi-Purpose Camera, enabling future applications involving video-based driver assistance systems or highly automated driving.

However, this success brings with it novel challenges, since a widespread adoption of deep learning in such safety-critical domains also increases the risk that malevolent actors (“adversaries”) try to fool the perception system. This can have potentially disastrous consequences, such as not detecting a pedestrian crossing a street and subsequently causing an accident. Because of this, the Bosch Multi-Purpose Camera relies on additional processing paths such as optical flow and structure from motion (“multipath approach”) to mitigate failure modes of DL-based perception and ensure safety. However, increasing the availability of the system and novel functions that rely more strongly on DL-based perception, such as traffic-light/-sign classification, will require DL to become inherently robust.

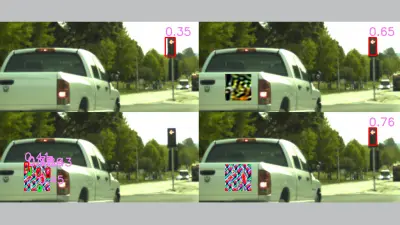

Unfortunately, DL-based perception is fooled surprisingly easily and consistently with the so-called adversarial example. More severely, physical artifact such as universal adversarial patches (where the adversary carefully chooses a pattern that it prints out and places in a scene) or semi-transparent adversarial camera stickers on the camera lens can fool object detectors in safety-critical ways. Specifically, universal patches are problematic because they are universal in the sense that once created, they can fool a perception system consistently in many different situations. Figure 1 (left) gives an illustration for the case of fooling a traffic light detector into not detecting a red traffic light. Clearly, not detecting a red traffic light can lead to catastrophic consequences. Thus, making deep-learning perception robust against such attacks is an important challenge.

Making deep-learning perception robust against such attacks is an important challenge.

Ineffective patch thanks to MAT model

Figure 1. Illustration of a digital universal patch attack against an undefended model (left) and a model defended with Meta Adversarial Training (MAT, right) on Bosch Small Traffic Lights. A patch can lead the undefended model to detect non-existent traffic lights and miss real ones that would be detected without the patch. In contrast, the same patch is ineffective against a MAT model. Moreover, a patch optimized for the MAT model (top right), which bears a resemblance to traffic lights, does not cause the model to remove correct detections.

Adversarial training: increasing robustness by simulating attacks during training

The currently most effective approach for increasing robustness of deep neural networks against such adversarial attacks is the so-called adversarial training. Adversarial training simulates an adversarial attack in every step of training and thereby trains the network to become robust to the specific type of attack. However, since every iteration of training needs to simulate the attack anew, only such attacks can be incorporated that are computationally cheap.

Adversarial training thus requires that training for robustness against cheap proxy attacks also increases robustness against more sophisticated and computationally expensive attacks. This assumption holds true for typical academic adversarial threat models. Prior work has also suggested that training against cheap proxy attacks increases robustness against universal patches and perturbations.

A false sense of robustness

Adversarial training makes current adversarial attacks for finding universal patches that fool a system ineffective. However, it remains an open question if the model is intrinsically more robust in such a way that also more advanced attacks that may be developed in the future remain ineffective. To shed light onto this, we have extended existing attacks in various ways: We changed their initialization, constrained them to low-frequency patterns, transferred them between different models, and systematically varied some of their hyperparameters. The results are telling: None of the models trained with existing adversarial training methods substantially increase perception robustness against universal patches. Apparent gains in robustness are thus due to evaluating a weak attack and not to true robustness of the neural network.

Meta Adversarial Training: efficient simulation of strong attacks by meta learning

Our hypothesis is that one can achieve true robustness against universal patches only by simulating strong attacks during training. However, those attacks are computationally expensive and using them in adversarial training is thus prohibitive. The key idea of Meta Adversarial Training (MAT) proposed by us is that one can meta-learn strong adversarial patches concurrently to adversarial training: More specifically, we still conduct a cheap adversarial attack in every iteration of training. However, we initialize this attack not randomly but from a large set of attack patterns that have been effective in previous iterations. Concurrently to training the neural network, we update this set of attack patterns via meta learning. This allows both generating strong and diverse attack patterns during training while keeping computation overhead tractable.

The key idea of Meta Adversarial Training (MAT) proposed by us is that one can meta-learn strong adversarial patches concurrently to adversarial training.

Results

Models trained with MAT achieve high robustness against universal patches and even against our large and diverse set of strong attack procedures outlined above. Figure 1 (right) gives an illustration for the case of the traffic light detector: The model trained by MAT correctly detects the red traffic light for any attack pattern tested. Moreover, also patches that have a resemblance to an actual traffic light do not cause the model to generate a false positive detection, which could have been equally harmful. A quantitive measure for evaluating the performance of object detectors, the “mean Average Precision” (mAP), drops from 0.41 to 0.09 for a standard model under a patch attack. That means: The model misses many actual traffic lights and also emits many false detections on the adversarial patch. A model trained with MAT maintains a mAP of 0.38. These results are encouraging and provide hope that deep-learning-based perception can become robust against physical-world attacks.

Check out the open source implementation of MAT available under the Bosch Research github page.