Machine learning testing – open questions and approaches

Bosch Research Blog | Post by Matthias Woehrle and colleagues, 2021-01-14

Co-authors: Christoph Gladisch, Christian Heinzemann, Martin Herrmann

Many industrial machine learning (ML) applications require some level of safety, especially in automated systems such as autonomous vehicles. Several papers have identified safety concerns and mitigation approaches. Our Bosch colleagues Oliver Willers, Sebastian Sudholt, Shervin Raafatnia and Stephanie Abrecht provide a good overview that focuses on the use of deep learning in safety-critical perception tasks. A prime mitigation approach mentioned in this work is testing.

The increased demand for the industrial use of machine learning calls for novel testing approaches to meet safety demands.

At Bosch Research, in the field of autonomous systems, we work on methods to account for safety demands and contribute towards making the use of ML safe. Our team focuses particularly on ML testing, so we keep up to date on this emerging research area. We also evaluate approaches from the literature on industrial examples and develop new methodologies ourselves.

In several publications over the last year, our team has provided an overview of open questions for ML testing and evaluated several concrete approaches. In the following, we will provide an overview of three papers related to this line of research.

Research questions in the field of ML testing

At the Second International Workshop on Artificial Intelligence Safety Engineering (WAISE 2019), we provided an overview of “Open Questions in Testing of Learned Computer Vision Functions for Automated Driving”. In this work, we systematically survey testing approaches and detail 11 exemplary research questions from a practitioner’s point of view. We hope to provide exciting research directions for others in the field. One example of such a question is: “Which kind of coverage criterion could be used to argue exhaustiveness of a test set?” This concrete question guided the research in the two papers discussed below.

What are possible machine learning testing techniques?

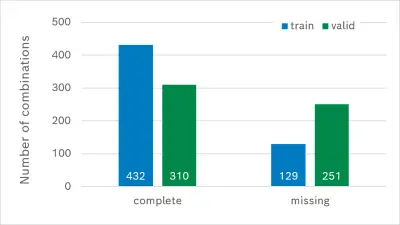

At the 2nd Workshop on Safe Artificial Intelligence for Automated Driving (SAIAD 2020), we discussed the use of a classical testing technique called combinatorial testing. Here, we demonstrated that classical approaches from software testing may also be leveraged in the domain of ML testing. Concretely, we use combinatorial testing to determine how well a given data set covers the relevant system domain. As shown in the figure, we analyzed a well-known dataset from the computer vision domain and identified where data is missing. Our workshop presentation covers the details.
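To make the idea of combinatorial coverage more tangible, here is a minimal sketch of a pairwise (2-way) coverage check over a labeled data set. It is not the tooling used in the paper. The function name `pairwise_coverage`, the attribute names and the toy samples are all hypothetical and chosen for illustration only.

```python
from itertools import combinations, product


def pairwise_coverage(domain, samples):
    """Return (coverage ratio, missing pairs) for all 2-way value combinations.

    domain  -- dict mapping each attribute name to its list of possible values
    samples -- list of dicts, one per data item, mapping attribute name to value
    """
    attribute_pairs = list(combinations(sorted(domain), 2))

    # Every value pair that a fully covering data set would have to contain.
    required = set()
    for a, b in attribute_pairs:
        for value_a, value_b in product(domain[a], domain[b]):
            required.add(((a, value_a), (b, value_b)))

    # Every value pair that actually occurs in the data set.
    covered = set()
    for sample in samples:
        for a, b in attribute_pairs:
            covered.add(((a, sample[a]), (b, sample[b])))
    covered &= required  # ignore values outside the declared domain

    missing = sorted(required - covered)
    return len(covered) / len(required), missing


# Hypothetical description of the system domain (assumption, not from the paper).
domain = {
    "time_of_day": ["day", "night"],
    "weather": ["clear", "rain"],
    "road_type": ["urban", "highway"],
}

# Hypothetical annotations of three images in a data set.
samples = [
    {"time_of_day": "day", "weather": "clear", "road_type": "urban"},
    {"time_of_day": "day", "weather": "rain", "road_type": "highway"},
    {"time_of_day": "night", "weather": "clear", "road_type": "highway"},
]

ratio, missing = pairwise_coverage(domain, samples)
print(f"pairwise coverage: {ratio:.2f}")
for pair in missing:
    print("missing combination:", pair)
```

In this toy setup, the list of missing combinations plays the role of the gaps highlighted in the figure: it points to attribute pairs, such as night combined with rain, for which the data set contains no example.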

In cooperation with the Fraunhofer Institute for Intelligent Analysis and Information Systems (IAIS), we evaluated a recent ML testing approach. Concretely, we evaluated a metric known as neuron coverage, which is intended to measure how well a neural network has been tested. Our empirical investigation shows, however, that this novel approach is not sufficient to argue that a neural network has been adequately tested.
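For readers unfamiliar with the metric, the following sketch computes one common, simplified reading of neuron coverage: the fraction of neurons whose activation exceeds a threshold for at least one test input. The tiny ReLU network, its weights, the threshold of 0.0 and the helper names are assumptions made for illustration. They do not reproduce the networks or the exact definition used in our evaluation.

```python
def relu(value):
    return max(0.0, value)


def forward(layers, inputs):
    """Run a small fully connected ReLU network and return per-layer activations."""
    activations = []
    current = inputs
    for weights, biases in layers:
        current = [
            relu(sum(w * x for w, x in zip(row, current)) + bias)
            for row, bias in zip(weights, biases)
        ]
        activations.append(current)
    return activations


def neuron_coverage(layers, test_inputs, threshold=0.0):
    """Fraction of neurons activated above `threshold` by at least one input."""
    total_neurons = sum(len(biases) for _, biases in layers)
    activated = set()
    for inputs in test_inputs:
        for layer_index, layer_acts in enumerate(forward(layers, inputs)):
            for neuron_index, activation in enumerate(layer_acts):
                if activation > threshold:
                    activated.add((layer_index, neuron_index))
    return len(activated) / total_neurons


# Hypothetical toy network: 2 inputs, 3 neurons in layer 0, 2 neurons in layer 1.
layers = [
    ([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]], [0.0, 0.0, 0.0]),
    ([[1.0, 1.0, 0.0], [0.0, 0.0, 1.0]], [-1.0, 0.0]),
]

small_test_set = [[1.0, 0.0], [0.0, 1.0]]
larger_test_set = small_test_set + [[2.0, 2.0], [-1.0, -1.0]]

coverage_small = neuron_coverage(layers, small_test_set)
coverage_large = neuron_coverage(layers, larger_test_set)
print(f"coverage with 2 inputs: {coverage_small:.1f}")
print(f"coverage with 4 inputs: {coverage_large:.1f}")
```

In this toy example, four hand-picked inputs already activate every neuron. A coverage value computed this way therefore says little by itself about how thoroughly the input domain has been exercised.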

The paper resulting from this fruitful collaboration received a best paper award at the Third International Workshop on Artificial Intelligence Safety Engineering (WAISE 2020).

Our team continues to work in this line of research. We are currently collaborating with our partners in the public project “KI Absicherung – Safe AI for Automated Driving”, funded by the German Federal Ministry for Economic Affairs and Energy, on delivering safe ML-based functions.

We thank all our collaborators within Bosch and in our public projects.

What are your thoughts on this topic?

Please feel free to share them via LinkedIn or to contact me directly.

Author: Matthias Woehrle

Matthias is a researcher in the area of system and software engineering. He started to work on software verification in the context of cyber-physical systems during his doctoral studies at ETH Zurich. Matthias is passionate about applying research to practical applications in an industrial context. At Bosch, his work is applied to various domains such as embedded control systems, autonomous systems and machine perception. Recently, his research focus has been on safety and testing of machine learning systems.
