Will AI replace classical component simulation?

Bosch Research Blog | Post by Felix Hildebrand, 2021-01-14

Simulation engineering at its limit

With AI gaining more and more importance in product engineering, I – like many other simulation specialists in the context of materials and components – have been wondering at one point whether AI would soon replace physical-based modeling and simulation in the product development process altogether. For decades, simulation engineers and researchers like us had been faced with growing demands regarding accuracy, accelerated product cycles and increasing complexity of materials and components. These requirements have led us to keep pushing modeling and simulation beyond their limits, using ever-more refined and sophisticated multi-scale and multi-physics models and both algorithms and hardware to speed them up. However, in numerous cases, we were confronted with our limits with respect to computational performance and accuracy, e.g. when trying to describe phenomena beyond full physical understanding.

AI as a game changer?

AI seemed like a promising solution to these challenges and, at the same time, as threat to our profession: With enough data – some told the story at the height of the AI hype – machine learning approaches would be able to accurately and efficiently describe complex phenomena such as the behavior of functional materials or the failure of components. Our long-honed art of paper-and-pencil derivation of consistent physical models, the artisanship of choosing the right boundary conditions to fully capture the essence of given problems and the highly complex fine-tuning of numerical models for efficient simulation seemed old-fashioned and out of date.

Data is sparse in product engineering

However, when putting AI to the test on our problems, it was obvious almost immediately that “enough data” is usually out of scope and that fully switching from physics- to data-based modeling is not the solution for all challenges in modeling and simulation. In a context where data is extremely sparse, expensive and sometimes inaccessible, where influence parameters are abundant and in settings where products have to be designed before they physically exist and can be used to generate data, pure machine learning very quickly reaches its limits as a solution strategy. And of course, it would be completely ridiculous to leave the huge amount of formalized and non-formalized domain knowledge about the behavior of materials and components present at Bosch and in the community unused.

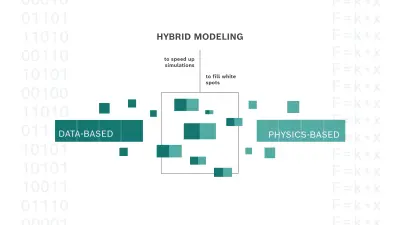

Hybrid modeling

You will not be surprised to learn that over the last years, we have confirmed that an optimal solution often lies between the two extremes: in a combination of both data- and physics-based modeling, which we now refer to as “hybrid modeling”. Even though it sounds intuitive that such combinations would outperform the individual approaches, there are countless possible ways to specify such combinations and by far not all of them work well. Here, I want to discuss two settings in the context of material and component modeling in which we have found the combination of machine learning and domain knowledge to make particular sense.

Speeding up simulations

The first setting is related to simulation speed. If, to achieve accurate predictions, your application requires huge computational resources that drastically limit treatable system sizes/time scales or related design variations and optimization, then you should check the following: Is there a basic building block within the simulation that is evaluated over and over and over? If you can identify such a building block and if, additionally, it does not depend on the particular problem solved but is shared e.g. across different component simulations, then machine learning can very likely drastically speed up your problem. An example for such building blocks are the force calculations in ab-initio molecular dynamics that depend on atom type combinations and configuration but not the particular simulation setup. Another example is the behavior of an underlying representative microstructural element in fully coupled multiscale simulations such as FE2 that depends on the material and the microstructure but not the particular component they are part of.

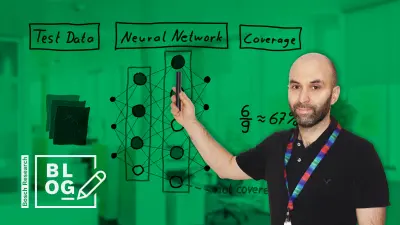

If these conditions are fulfilled, the basic approach is now to replace the relation that these blocks provide between features (e.g. atomic configuration or strain) and targets (e.g. force or stress) by a suitable regression algorithm, typically a neural network. In setting up such a network, it is crucial to take advantage of the physical structure and any invariances. Such invariances could be invariances with respect to certain operations such as rotation, translation or particle exchanges. They should be taken advantage of both when designing the architecture of the network and its features and when training the network on suitably sampled simulated data from the original building block.

Overall, we have found that these approaches can lead to speedups of two to four orders of magnitude, so that week-long simulations run within hours and accurate simulations of complex and computationally nearly inaccessible systems suddenly become feasible. In some cases, an additional benefit of the generated machine learning models can be their use for parameter identification or optimization.

Week-long simulations now run within hours thanks to a speedup of two to four orders of magnitude.

Filling white spots

The second scenario where “hybrid modeling” is often superior to both pure physics-based modeling and data-based modeling is much more diffuse and we are not (yet) aware of a general cookbook approach as sketched out for speeding up models above. This scenario occurs if there is a physical model that captures some but not all relevant mechanisms of a problem and if, at the same time, there is some but only little experimental data available. In such settings, we find that a combination of domain understanding and machine learning can often yield an improved solution and that there are two directions from which the problems can be approached:

If the starting point of the combination is a physical model that covers the general behavior but lacks a relevant mechanism and only inaccurately predicts experiments, then the available data can be used to train a correction to the model. Such a correction can either be directly added to the simulation target or can be incorporated as a correction to an internal parameter of the physical model. The main benefit we see from such approaches is the increased accuracy of the solution, the good interpretability and a reasonable generalization beyond the available data.

However, if the starting point is a data-based model that suffers from lack of data, than the physical understanding of the relevant mechanisms and features can be used for physics-based feature selection and feature engineering, both crucial in the context of small data problems. The reduced requirement of data for accurate predictions and the good interpretability of features is the main benefit of this category.

As mentioned, these problems, the related optimal hybrid modeling approaches and their benefit are very much dependent on the form and structure of the available physical models as well as the nature of the given data. In our opinion, the key in this context is to gain broad experience and to regularly exchange and distill this experience into more and more concrete approaches.

Learn from each other

Besides the technical viewpoint detailed above, we have found that the secret sauce of such hybrid modeling is bringing together both modeling and simulation experts with fundamental hands-on understanding of and curiosity for machine learning as well as machine learning experts with curiosity for physics and engineering and openness for the depths of real-world challenges. This process should be given some time – I still vividly remember how we struggled to find a common language in some of the first meetings. We are extremely lucky here at Bosch that both groups are rapidly growing and interacting with increasing intensity.

Beginning of a journey

Even though we have already seen great potential in the synergy of data and physics in a number of applications, I feel like we are only at the beginning of a journey. As we learn more and more from each other and explore the endless possibilities of combining approaches, new ideas will arise and – if we accept some failures along the way – we will come up with better and better methods and tools for product design. My prediction is that on this journey, machine learning and classical modeling will continue to beneficially complement each other for the decades to come.

What do you think? I’m very curious to hear your opinion!

Please feel free to share your thoughts via LinkedIn or to contact me directly.

Author: Felix Hildebrand

Felix is a research engineer working on computational material modeling on different scales. His current focus is on the intersection between physical modeling and artificial intelligence. The overall question in this process is how these two approaches can best be combined to form “hybrid” methods. The goal is to substantially improve our products through faster and more precise models.