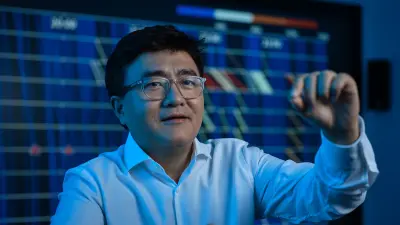

Liu Ren, Ph.D.

VP and Chief Scientist of Integrated Human-Machine Intelligence

I am the Vice President and Chief Scientist of Integrated Human-Machine Intelligence (HMI) at Bosch Research in North America. I am responsible for shaping strategic directions and developing cutting-edge technologies in AI, focusing on big data visual analytics, explainable AI, mixed reality/AR, computer perception, NLP, conversational AI, audio analytics, wearable analytics, and so on, for AIoT application areas such as autonomous driving, car infotainment and advanced driver assistance systems (ADAS), Industry 4.0, smart home/building solutions, robotics, etc. As the responsible global head, I oversee these research activities for teams in Silicon Valley (U.S.), Pittsburgh (U.S.), and Renningen (Germany). I have won the Bosch North America Inventor of the Year Award for 3D maps (2016), Best Paper Award (2018, 2020), and Honorable Mention Award (2016) for big data visual analytics in IEEE Visualization.

Please tell us what fascinates you most about research.

In the era of AI, our research in this domain is about how we combine machine intelligence with human intelligence to deliver impactful AIoT products and services with superior user experiences. AI research on topics such as mixed reality/AR, conversational AI, and smart wearables could lead to customer products with a great impact on our everyday lives. In addition, tackling AI challenges in big data visual analytics, explainable AI, NLP, audio analytics, etc. can help automate many labor-intensive tasks for our workers and developers. This potential is very exciting to me. Our cutting-edge research outcomes can not only be presented at leading AI conferences but, more importantly, are tangible: they are unique selling points (USPs) that enable our fascinating products to succeed in our business areas, including autonomous driving, advanced driver assistance, smart home/buildings, car infotainment, smart manufacturing, and robotics.

What makes research done at Bosch so special?

First of all, Bosch has a global setup. Working in our research unit in Silicon Valley, the hub of AI and software innovation in the world, gives our researchers an opportunity to engage the Silicon Valley ecosystem to identify and shape early trends, to work with professors from top-level universities such as Stanford and UC Berkeley to advance core research, and to drive innovation in addressing emerging and undiscovered business needs, impacting the world and leading Bosch to the future. Apart from a global setup, Bosch also has a diversified product portfolio that enables our researchers to drive innovation in building customer-centric and market-driven sustainable AI solutions, leading to real-world impact that goes beyond scientific excellence. However, this does not mean we focus only on the short term. Bosch is in a unique position to commit to long-term research that fits our company strategy because we are a private company that is much less influenced by fluctuations in the stock market.

What research topics are you currently working on at Bosch?

Research is about breadth and depth. While the research area of integrated human-machine intelligence (HMI) has a broad scope, different research applications in this field share something in common: most of them need to deal with domain-specific AI technologies and user experience requirements. As the responsible Global Head, I work closely with my global teams to develop research strategies and roadmaps for different AIoT topic areas, a challenging task that needs a deep understanding of technology trends and limitations, the market situation, business needs, and resource constraints. As Chief Scientist, I focus my own research on visual computing, a domain-specific AI topic area that is closely related to computer vision, computer graphics, visualization, and machine learning, and which also happens to be my favorite research topic. My recent research focus includes big data visual analytics, explainable AI, mixed reality/AR, 3D perception, and smart wearables, which are a core part of key products and services in autonomous driving V&V, cloud-based retail analytics, smart car repair assistance, car infotainment, smart measurement tools, and Industry 4.0. In particular, together with my team and partners, I helped shape the direction of visual analytics and explainable AI research for AIoT in the research community, and we recently won three best paper or honorable mention awards for Bosch Research at top CS conferences in this domain.

What are the biggest scientific challenges in your field of research?

I see three major challenges in our research for AIoT. All are related to the scale-up needs of typical AIoT products and services. First, truly understanding users is the key to enabling a superior user experience for AIoT products (e.g. smart speakers) in large-scale deployment. This is a long-standing research problem, as it is still very challenging to accurately understand users’ intentions, behavior, emotions, etc. from different input modalities such as speech, audio, gesture, and visuals. Second, figuring out how to enable intuitive UX for AIoT products and services in the wild remains a big challenge. Most existing solutions work relatively well in controlled environments (e.g. AR systems in quiet indoor environments) but lack robustness or scalability in uncontrolled environments (e.g. noisy outdoor environments), which limits their usability and hinders wider adoption. Finally, in addition to UX, a lack of trustworthiness could be another factor that hinders the widespread adoption of an AIoT product or service, as most AI systems run like a black box. Leveraging human intelligence to improve the interpretability and robustness of an AI system could help here. For example, visual analytics that combines representation learning (e.g. XAI), data visualization, and minimal user interaction is considered a promising approach to addressing this problem.

How do the results of your research become part of solutions “Invented for life”?

For the results of our research to become part of solutions “Invented for life”, they must have real-world impact. One earlier highlight is 3D artMap. As the world’s first artistic 3D map for navigation, 3D artMap uses an artistic look to emphasize the important elements of a map, making orientation easy and the navigation experience personalized. It has been adopted as part of the automotive industry standard and is currently being used in several in-car navigation products. Another example is Bosch Intelligent Glove (BIG), a recent highlight in the Industry 4.0 domain. BIG is a smart sensor glove that, based on our unique fine finger motion recognition and analysis algorithms, can improve production quality and efficiency and thus reduce manufacturing costs. In addition to its successful SOP in China, BIG recently won “The World’s Top 10 Industry 4.0 Innovation Award” from the Chinese Association of Science and Technology. It was honored alongside Industry 4.0 innovations from major global organizations such as Siemens and GE. Finally, most of our recent research on visual analytics and explainable AI has not only had an impact on our scientific community (e.g. three recent best paper or honorable mention awards at the top computer science conference for this field), but has also become operational in our core AIoT applications focusing on mobility, consumer goods, and smart manufacturing.

Curriculum vitae

Since 2006

CS Ph.D. graduate, vision-based performance interface, machine learning for human motion capture, analysis, and synthesis, Carnegie Mellon University (USA)

2001

CS Ph.D. intern, computer graphics, real-time rendering, and scientific visualization, Mitsubishi Electric Research Laboratories (USA)

1999

CS M.S. graduate, AI-assisted computer-aided design, Zhejiang University (China)

Selected publications

L. Gou et al. (2020)

- L. Gou, L. Zou, N. Li, M. Hofmann, A. K. Shekar, A. Windt, L. Ren

- IEEE Visualization (VAST) (2020)

- IEEE Transactions on Visualization and Computer Graphics 2021

- Best Paper Award

L. Ren (2020)

- Bosch Digital Annual Book 2019

L. Ren (2019)

- Auto.AI USA 2019

- Opening Keynote

Y. Ming et al. (2019)

- Y. Ming, P. Xu, F. Cheng, H. Qu, L. Ren

- IEEE Visualization (VAST) (2019)

- IEEE Transactions on Visualization and Computer Graphics (Volume: 26, Issue: 1, Jan. 2020)
