Self-driving cars: lights, camera, action!

Matthias Birk and Andreas Schulz are teaching vehicles to see. The camera for self-driving cars they helped develop uses AI to ensure more reliable object recognition. Their job is at the crossroads of mind-bending theory and eye-popping practice.

Realizing the vision of autonomous cars

A clear idea of the present can help point the way forward, at least in the case of Matthias Birk and Andreas Schulz, two Bosch engineers working on a multi purpose camera. Known as MPC3, it enables vehicles to recognize their surroundings more quickly and reliably than ever before. The camera is helping to solve current problems with surround sensors and, in doing so, is propelling the development of automated driving forward.

The new MPC3 multi purpose camera — better than the human eye

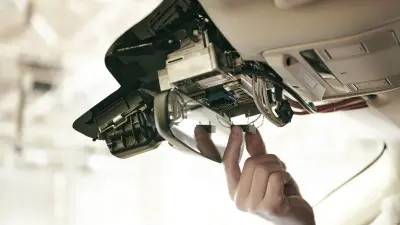

Together with colleagues, project managers Birk and Schulz coordinated the 20 or so teams involved in the camera’s development. These teams have not only pooled a great deal of knowledge; they are also jointly responsible for a daunting task. “We want to make the car a better driver. This is why the camera-based technology has to work more reliably than people,” Birk says. The MPC3 already does this. “Just because people look, it doesn’t necessarily mean they grasp what they’re seeing. We call these ‘looked-but-failed-to-see errors,’” Schulz says. By contrast, the video camera, mounted behind the windshield between the headliner and the rear-view mirror, sees things very clearly indeed and triggers the appropriate response in an instant. And it never tires: even after a long drive, it remains as alert and responsive as it was during the journey’s first few kilometers.


Drives safely, brakes on its own

Vibrant shapes and surfaces flicker across the dashboard monitor as Birk and Schulz demonstrate the camera’s functions on the test track at the Bosch location in Renningen. The multi purpose camera scans the road, processing information about what it sees with remarkable speed. Responding to an obstacle in its path, the car brakes hard.

An AI-enabled milestone

The MPC3 marks a major stride toward autonomous vehicles, an advance impelled mainly by artificial intelligence. To develop the camera, the Bosch team took a multi-path approach. Its engineers and programmers created a software architecture that combines conventional image-processing algorithms with AI-driven methods, and embedded it on a high-performance system-on-chip (SoC) with an integrated microprocessor. “This enables peerless scene understanding and reliable object recognition,” Schulz says.

Three paths to a reliable result

Measuring 12 by 6.1 by 3.6 centimeters, the MPC3 employs three paths to identify objects.

Path 1: The classifier

The first path is the conventional approach already in use. With the help of machine learning, classifiers are trained to recognize and classify specific objects such as vehicles and pedestrians.
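To make the idea of a trained classifier concrete, here is a minimal sketch: a one-feature logistic regression fitted by gradient descent. The feature (the height-to-width ratio of a detection box), the toy training data, and the names `train` and `classify` are hypothetical illustrations; the article does not describe how the MPC3's classifiers are built, and real camera classifiers learn from image data rather than one hand-picked number.

```python
import math

# Hypothetical toy training set: (height / width of a detection box, label).
# Label 1 = pedestrian, 0 = vehicle. Real classifiers learn from image pixels.
TRAIN = [(2.8, 1), (3.1, 1), (2.5, 1), (2.2, 1),
         (0.6, 0), (0.8, 0), (0.5, 0), (0.9, 0)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(data, lr: float = 0.5, epochs: int = 2000):
    """Fit weight w and bias b of a one-feature logistic regression by gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y  # derivative of the log-loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return w, b


def classify(aspect_ratio: float, w: float, b: float) -> str:
    """Label a box 'pedestrian' if the predicted probability is at least 0.5."""
    return "pedestrian" if sigmoid(w * aspect_ratio + b) >= 0.5 else "vehicle"


w, b = train(TRAIN)
print(classify(2.7, w, b))  # pedestrian
print(classify(0.7, w, b))  # vehicle
```

A single feature keeps the example readable; the principle of fitting model parameters to labeled examples and then applying them to new detections is the same with richer inputs.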

Path 2: The optical flow

In the second path, the camera uses optical flow and structure from motion (SfM) to detect raised objects bounding the road, such as curbstones. It tracks corresponding points across successive images and computes three-dimensional structures from how those points move.

“What’s more, the images’ resolution is higher than ever. Instead of roughly one megapixel, we now have roughly 2.5. This allows us to detect objects even earlier and more precisely,” Birk says. The camera’s angle of view has also been widened to around 100 degrees. “This is more than we humans can normally see sharply with a focused gaze,” he says.
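The structure-from-motion geometry described under Path 2 can be sketched for its simplest case. The code below assumes a pinhole camera that drives straight ahead by a known distance between two frames with no rotation, a flat road, and a feature whose pixel positions in both frames have already been matched (the optical-flow tracking step itself is not shown). Under pure forward motion, image points move radially away from the image center, so the ratio of their distances from the center gives depth: r2 / r1 = Z / (Z - d). The focal length, camera height, 5 cm threshold, and all function names are hypothetical, not MPC3 parameters.

```python
import math
from dataclasses import dataclass


@dataclass
class Point3D:
    x: float  # lateral offset in metres (right is positive)
    y: float  # vertical offset in metres (down is positive, camera center at 0)
    z: float  # distance ahead in metres


def project(p: Point3D, f: float) -> tuple[float, float]:
    """Pinhole projection to pixel coordinates relative to the image center."""
    return f * p.x / p.z, f * p.y / p.z


def triangulate_forward_motion(uv1, uv2, d: float, f: float) -> Point3D:
    """Recover a 3-D point from one feature observed in two frames.

    Assumes the camera moved straight ahead by d metres without rotating, so
    r2 / r1 = Z / (Z - d), which rearranges to Z = d * r2 / (r2 - r1).
    """
    r1, r2 = math.hypot(*uv1), math.hypot(*uv2)
    if r2 - r1 <= 1e-9:
        raise ValueError("no measurable parallax (point near the image center)")
    z = d * r2 / (r2 - r1)  # depth at the time of the first frame
    return Point3D(uv1[0] * z / f, uv1[1] * z / f, z)


def is_raised(p: Point3D, camera_height: float, threshold: float = 0.05) -> bool:
    """True if the point lies more than `threshold` metres above a flat road plane."""
    return camera_height - p.y > threshold


F, H, D = 1000.0, 1.2, 1.0  # hypothetical: focal length (px), camera height (m), distance driven (m)
scene = {
    "curb": Point3D(3.0, H - 0.12, 10.0),  # top edge of a 12 cm curbstone
    "road": Point3D(3.0, H, 10.0),         # point on the road surface
}
results = {}
for name, pt in scene.items():
    later = Point3D(pt.x, pt.y, pt.z - D)  # same point after the car moved D metres ahead
    est = triangulate_forward_motion(project(pt, F), project(later, F), D, F)
    results[name] = (est, is_raised(est, H))
    print(name, f"depth={est.z:.2f} m", "raised" if results[name][1] else "flat")
```

Forward motion is chosen because it is a car's dominant motion and reduces triangulation to one line; practical SfM pipelines also estimate rotation, combine many noisy tracks, and fit the road surface rather than assuming it is flat.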

Path 3: Artificial intelligence

The third path puts artificial intelligence to good use. In another first for object detection, this new generation of the multi purpose camera can distinguish the roadway from the shoulder and identify discrete objects.

It uses neural networks for semantic segmentation, which assigns each pixel of an image to a defined category. This is a huge advantage. “We no longer need road markings to keep a self-driving car safely on track,” Schulz says. Combining all three detection paths minimizes errors.
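At its final step, semantic segmentation comes down to choosing the highest-scoring category for every pixel. The sketch below assumes the network's per-pixel scores are already available (the three-class list and the toy score values are hypothetical) and shows how a road mask alone can yield a path to follow: for each image row it takes the midpoint between the leftmost and rightmost road pixels, with no lane markings involved.

```python
# Hypothetical category list; a production network predicts many more classes.
CLASSES = ("road", "shoulder", "obstacle")


def segment(scores):
    """Assign every pixel the class with the highest score (per-pixel argmax).

    scores[row][col] is a tuple with one score per entry in CLASSES, e.g. the
    softmax output of a segmentation network (assumed given, not computed here).
    """
    return [[CLASSES[max(range(len(px)), key=px.__getitem__)] for px in row]
            for row in scores]


def road_centerline(labels):
    """Per image row, the column midway between the outermost road pixels (None if no road)."""
    centers = []
    for row in labels:
        cols = [c for c, label in enumerate(row) if label == "road"]
        centers.append((cols[0] + cols[-1]) / 2 if cols else None)
    return centers


# Toy 2 x 6 score map: R = road-like, S = shoulder-like pixel scores.
R, S = (0.80, 0.15, 0.05), (0.10, 0.85, 0.05)
scores = [
    [S, R, R, R, R, S],
    [S, S, R, R, R, R],
]
labels = segment(scores)
print(labels[0])                # ['shoulder', 'road', 'road', 'road', 'road', 'shoulder']
print(road_centerline(labels))  # [2.5, 3.5]
```

Taking the midpoint between the outermost road pixels is deliberately crude: it ignores obstacles inside the road region and would need smoothing across rows before it could guide a vehicle.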

The ace in the hole — an agile project framework

Keeping things on track was very much on the managers’ minds during this challenging project’s development phase. “We want to be able to react quickly to changes,” Schulz says, adding: “That’s why it’s important for us to have a lot of flexibility in how we work.” A contact person in each team allows the project managers to coordinate teams working independently of each other. Even so, no day can be planned with total precision, which makes the job both exciting and challenging. “There is no master plan for the technology we’re working on; practically everything about it is uncharted territory,” Birk says.

Leading the effort to usher in autonomous vehicles

“As an engineer, it’s great to be able to work in such proximity to the real world to make the lives of many people safer and less stressful,” Birk says. Schulz seconds that notion: “Our work has a direct impact on the everyday lives of many people, which is motivating. It’s a privilege to be able to play a leading role in co-creating the future at such an early stage in our careers.”

The next milestone on the road to the self-driving system, the fourth generation of the multi purpose camera, already awaits the duo’s attention.

Profile

Matthias Birk and Andreas Schulz

Project managers, Function and Algorithms, Video Perception, Cross-Domain Computing Solutions, Bosch

We innovate. That means being open to every sort of change, every day.

Matthias Birk and Andreas Schulz graduated from the Karlsruhe Institute of Technology (KIT). Birk studied electrical engineering with a focus on system-on-chip technology. Schulz majored in mathematics with a minor in computer science. After interning at the Bosch Research and Technology Center in Palo Alto, California, Birk earned a doctorate at KIT with a dissertation on accelerated medical image reconstruction using parallel heterogeneous hardware platforms. Schulz opted to do his PhD in image processing at Bosch, where he is now working to chart the future of driving.